Network-Oriented Service Controls

My Ph.D. undertook a detailed investigation of network-oriented service

control (NOSC) mechanisms for providing QoS guarantees in Internet services.

NOSCs operate at the network and transport layers; thus, they require

neither OS changes nor difficult offline capacity analysis. Furthermore,

NOSCs can be easily integrated into network protocols, firewalls, and Layer-3+

load-balancers.

Because these control mechanisms are located on the path between the

client and the server, the applied control will affect both

the clients' future requests and the services' processing capacities. Central

to my research is establishing a fundamental understanding of how

arriving requests react to the applied control, and how applications react to

the change in the server's load. The combination of both views---the

client's and the service's---builds a complete picture detailing the

performance, effectiveness, and limitations of using NOSC for

controlling Internet services. My research methodology balances analytical

modeling with empirical validation. Analytical modeling provides greater

insight into the problem at hand, while empirical validation provides

evidences for real-world large-scale deployment.

Papers

Eve: A Scalable Network Client Emulator

Hani Jamjoom, Howard C. Tsai, Kang G. Shin and Sharad Singhal

10th Communications and Networking Simulation Symposium

Norfolk, VA, March 2007

On the Role and Controllability of Persistent Clients in Traffic Aggregates

Hani Jamjoom and Kang G. Shin

IEEE/ACM Transactions on Networking

Vol. 14, No. 2, April 2006

Re-synchronization and Controllability of Bursty Service Requests

Hani Jamjoom, Padmanabhan Pillai and Kang G. Shin

IEEE/ACM Transactions on Networking

Vol. 12, No. 4, August 2004

Persistent Dropping: An Efficient Control of Traffic Aggregates

Hani Jamjoom and Kang G. Shin

ACM SIGCOMM

Karlsruhe, Germany, August 2003

Adaptive Packet Filters

John Reumann, Hani Jamjoom and Kang G. Shin

IEEE GLOBECOM

San Antonio, Texas, November 2001

Design Objectives

Classic network-based QoS-management approaches focus on fair

allocation of network bandwidth for different flows. They are effective for

long-lived connections, e.g., multimedia streaming, where the primary

component of user-perceived latency is data transfer.

What has occurred with the advancement in Internet services and

improvement in server and network capacity is a shift in the time and

space granularity of requests: each request is now proportionally

shorter in time and requires less network and server resources. At the sane time, each

server must handle a much greater number of requests. Furthermore,

requests are becoming more correlated, as future requests depend

greatly on the successes of previous ones. A customer in an e-commerce site,

for instance, will first browse for a product, add it to a shopping cart, and

then check out. If NOSCs are to be effective in providing QoS support

and overload protection, they must correctly deal with how requests are issued

by clients and how servers process such requests. In

particular, three design objectives have influenced the development of

NOSCs:

o1. QoS Differentiation. QoS differentiation can be enforced across

two dimensions: service and client. For the former, a server that is

hosting multiple services may need to differentiate among the

levels of service that each is getting. In contrast, a server may also

need to differentiate between different client groups. In both cases, the

server must first classify incoming requests based on the

destination service or the originating client group. QoS differentiation

is then achieved by appropriately throttling incoming traffic (as a whole) to

match the server's capacity while giving higher priority (or weight) to

traffic flows belonging to different services or client groups---a similar

idea to Rate-Controlled Static Priority and DiffServ. Since

each service may react differently to the applied controls, NOSCs focus on

determining what and how much to control to reach their desired

goal.

o2. Minimize Client-perceived Latency. From a client's perspective,

delay is the most important aspect in the performance of servers and networks.

NOSCs, however, cannot reduce the processing delay of requests

inside an Internet service (because they do not modify the actual service). To

this end, NOSCs treat the server as a black box where it can

bound the client's delay using two approaches. First, it can enhance the

performance of existing controls before a request is admitted into service.

This is akin to using Random Early Drop (RED) as a replacement

for drop tail inside Internet routers to improve the controllability of long-

lived TCP connections. Second, it can perform a form of low-level

admission control, where new (connection) requests are admitted only if they

do not violate some performance criteria. This is similar to RSVP and

measurement-based admission control, but here server capacity is

the resource under control instead of network capacity.

o3. Improved Reaction to Overload. Overload protection is one of

the primary goals that motivate using NOSCs. Flash crowd events (FCEs) and

distributed denial of service attacks (DDoS) can easily overwhelm

even the most powerful servers. NOSCs are well-suited for providing this type

of overload protection since FCEs and DDoS attacks can be both detected

and mitigated at the network or transport layers. Therefore, appropriately-

configured NOSCs can act as the first-line of defense against server

overload.

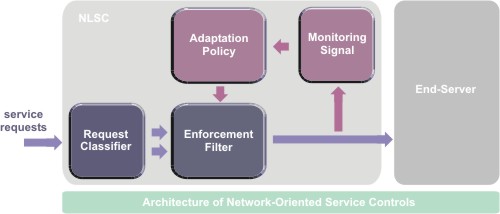

The high-level architecture of most NOSCs adheres to traditional control

models, which consists of a controller and an input to be controlled. In

NOSCs, network traffic, which is represented by arriving requests, is

the input. The controller refers to the software (or hardware) mechanisms that

change the behavior of the input as it is passed to the output. A controller

can be open or closed loop. In a closed-loop design, the controller

computes the difference between a target and measured output value, and uses

it to fine-tune its controls. Open-loop controllers do not feed back any

information from the output. More specifically, the controller can

consist of four basic components:

c1. Packet classifier splits incoming requests into different

classes based on client groups or destination services. Without this

classification process, all incoming requests are treated

equally. Therefore, the packet classifier is a crucial component for providing

QoS differentiation; however, it is not necessary for providing overload

protection.

c2. Enforcement filters specify how to control the incoming

traffic or requests. They generally have an open-loop design. Examples of this

component include random dropping and token buckets---both are

used to shape the underlying traffic to meet a specific transmission

rate. The enforcement components is referred to as filters since

they filter out nonconforming traffic.

c3. Adaptation policies describe how these filters are manipulated

based on specific input conditions. They provide the feedback loop for

implementing more complex control algorithms. For instance, a

rate-based dropping technique can increase the corresponding drop probability

based on the load on the server to force more TCP connections to back off,

thus reducing the arrival rate of incoming traffic.

c4. Monitoring signal is a key element in providing the adaptation

mechanism. Queue thresholds, such as overflow and underflow, are commonly used

to trigger the adaptation policy to take corrective actions.

Signals reflect the health of the system as well as the effectiveness of the

underlying controls.

Different designs and implementations of these four components give rise to

drastically different NOSCs. Part of my research is to look at different

design alternatives with the aim of finding those that are best

suited---in terms of performance, robustness, and implementation---for

controlling Internet services.

My Ph.D. Research Focus

My Ph.D. research followed a structured approach for studying the interactions

between applied controls, the resulting reaction from the

underlying traffic, and the change in client-perceived latency.

Located between the client and the service---either inside a router or at

the entry point of a server---the applied control has effects in two

directions. In one direction, it affects the client's traffic as the

controller decides what to let through and what to deny access. In the other

direction, the applied control also affects the performance of the server as

it dictates the maximum arrival rate (and possibly the distribution)

of requests into service. Therefore, my research studied both directions.

The exact problems are detailed below.

p1. Gap Between Request Arrival Models and Controls. The majority of

existing studies view request arrivals at an Internet

service as a train of uncorrelated events from the perspective of the applied

control. Such studies have looked at snapshots of flow

aggregates (under specific load conditions) to characterize the various

aspects of request arrivals (generally using an ON/OFF traffic model). While

this is useful in many aspects of networking research, their models

are too rigid to describe how request arrivals will change as different

controls are applied to incoming traffic. Describing the arrivals with a

Weibull distribution, for example, does not show how future arrivals

will be affected by an increase in the number of dropped requests.

Without this information, one cannot accurately predict the sensitivity of

arriving requests to the applied controls. This may result in having

a controller that oscillates between over- and under-enforcement. There

is then a need for a request arrival model that incorporates this crucial

feature. The new model must characterize the distribution of

request arrivals and how it will change with different enforcement

policies. This new understanding will not only improve the design of

future control mechanisms, but will also allow more realistic testing

before they are deployed in real systems.

p2. Inadequacy of Traditional Enforcement Mechanisms. The way that traffic

is controlled has remained relatively unchanged with

buffering or dropping (either randomly or using token buckets) as the

defacto mechanisms. Such mechanisms have proven their

effectiveness at controlling long-lived TCP connections. However, by adopting

them directly for controlling Internet services, they are assumed to also be

effective for short-lived requests---an assumption that must be

validated. What is needed is, thus, a rigorous investigation of the

traditional controls that evaluates their effectiveness, pinpoints their

shortcomings, and enhances their capabilities based on the new

understanding of the intrinsic behavior of arriving requests. Such new

mechanisms can provide improved performance for both the client and the

server.

New enforcement mechanisms are also needed. Application-level and OS-

level QoS mechanisms have relied on admission control to allow only those

requests that can be served while meeting their performance targets

without causing the violation of targets for previously-admitted requests.

Admission control tells the connecting clients that their requests will either

be admitted and served in a timely manner or rejected immediately.

The capability of explicitly rejecting requests is not fully available to

NOSCs as they operate at the IP level. However, NOSCs can greatly

improve both the controllability and client-perceived latency by

having a similar mechanism. Especially with large and unexpected request

bursts or flash crowds, explicit rejection can avoid repeated attempts by the

clients to gain access to the corresponding service, which may only aggravate

the overload situation.

p3. Search for the Optimality of Applied Controls.

The importance of determining the relationship of the applied control and

request arrivals is outlined above. There is, nonetheless, a greater need for

a deep understanding of how the control mechanisms (either the

traditional or newly-proposed) will affect the client-perceived latency.

This is especially necessary as each control policy has a wide range of

possible parameter settings. A brute-force study of all possible

control policies with all possible parameter selections is a daunting

task. By creating powerful (analytic) models that are capable of

expressing client-perceived delay as a function of the applied

control, the search from the optimal control policy is greatly

simplified. The result is the ability to choose the enforcement policy

that minimizes the network delay while meeting the desired control

targets.

p4. No Translation Between Network Load and Server Load.

Classic network control mechanisms (like WFQ and CBQ) have focused on the

fair partitioning of network resources among competing traffic. The

translation between network load and resource requirement, therefore,

is straightforward as the length of each packet is enough to calculate the

required buffer size, consumed bandwidth, and transmission overhead. When

NOSCs are applied to Internet services, this direct translation is no

longer valid as single requests can produce huge processing delays inside an

Internet service. This is particularly important for QoS-sensitive

services, where NOSCs provide a form of low-level admission control.

To appropriately control arriving requests, it is crucial to have enhanced

understanding---either through empirical or analytical studies---of

how these requests will be processed inside an Internet service. The goal

here is, thus, to provide a mechanism that translates between arriving

requests (or network load) to server load (or server resource

requirements). This capability can be used to appropriately configure the

NOSC policies, investigate the interactions between different services, and

study the true extent for which NOSCs can be used to provide QoS

guarantees/differentiation.

p5. Rigid Controller Architecture. Many of the existing NOSCs are

designed with a specific deployment

environment in mind. This rigidity hinders further deployment and

adoptability of these techniques, as there is a wide range of

services that may need specific controller customization. However, the

basic components of any NOSC are relatively simple and can be designed in a

modular way that allows a kind of plug-and-play approach for

providing service-specific customization. Combining easy-customization with

enhanced understanding and improved controls as outlined above will ensure

large-scale and cost-effective deployment.

Primary Contributions

My Ph.D. dissertation makes several key contributions toward advancing the

state of the art in using NOSCs. They are motivated by the issues outlined

earlier and are centered around the need for fundamental

understanding of the relationship between the applied control, the reaction of

the underlying traffic, and the resulting change in client-perceived

latency.

i. Traffic Analysis. Rigorous analysis of the effects of controls on incoming traffic to an

Internet service is performed. This is a crucial step for designing and

evaluating any network control mechanism. Based on this empirical study, the

persistent client model is introduced to capture how client

requests do not simply go away when dropped. Instead, there is a long-range

dependency between the applied controls and future request arrivals.

The proposed model is a departure from traditional approaches where client

models are based on observed traffic aggregates (mostly through traffic

traces). In contrast, this model is created after careful

analysis of a wide range of individual clients accessing different web

services. Combining the observations of different clients, a coherent picture

is built detailing the expected behavior of the aggregate behavior of

arriving requests.

With this new model, several unexpected aggregate behaviors in routers and

end-servers are unfolded during different load scenarios. In particular,

there was a surprising discovery of re-synchronization of dropped

requests. This has a strong implication on the control of bursty request

arrivals. Specifically, it shows that traditional traffic policing

mechanisms (e.g., Random Early Drop and Token Bucket Filters) often

underestimate the effects of the enforcement policy on the underlying client-

perceived latency.

Because this work relies on actual implementation and real measurements,

Eve---a scalable, extensible, and programmer-friendly tool that allows

for fast generation of clients to test high-capacity Internet servers

---is built. Eve is used to simulate thousands, or tens of thousands, of

persistent clients with very high accuracy. It is a crucial component in the

evaluation process as it integrates the proposed user-model with on-

line measurements.

ii. Optimal Drop Policy. Based on the proposed client model, an analytical model is developed to

relate the drop probability and the connection-establishment delay, which can

easily dominate the total client-perceived latency when accessing an

Internet service. This model is constructed such that it can be applied to a

wide range of drop policies. With this capability, the effects of different

drop policies are explored and persistent dropping, a simple

and novel mechanism that minimizes the client-perceived delay and the number

of retransmitted requests while achieving the same control targets as

traditional control mechanisms, is proposed. In particular,

persistent dropping achieves three important goals: (1) it allows

routers and end-servers to quickly converge to their control targets without

sacrificing fairness, (2) it minimizes the portion of client

delay that is attributed to the applied controls, and (3) it is both

easily implementable and computationally tractable. Two working

implementations of persistent dropping are implemented that can be

deployed in routers or end-servers.

iii. Reject Message Extensions for TCP Connections. As mentioned earlier, admission control, from the point of the network

stack, is a high-level operation that has been used extensively by QoS

mechanisms. There are many situations where it is more advantageous

to both the server and the client to perform this admission control before the

connection is fully established. After surveying possible solutions, it was

clear that there is no universal mechanism for providing admission

control at the IP level. A new IETF Internet standard is thus proposed for

extending the Internet Control Message Protocol (ICMP) to provide explicit

rejection capability. The choice of adding a new ICMP message, as

opposed to changing the TCP stack implementation, is motivated by the need for

a simple implementation that can be incrementally deployed. This new

capability is an effective way for server and router to deal with clients'

persistence. It also provides the client with immediate information, rather

than relying on timeouts to infer the server's state.

iv. Improved Traffic Prediction. Because incoming requests to an Internet service can be inherently bursty,

a new multi-stage filter is introduced to perform accurate traffic prediction

of future arrivals based on the applied control policy. Traffic

prediction was based on the persistent client model and was augmented with a

rejection mechanism to create the Abacus Filter (AF), a new traffic

policing mechanism. AF complements the persistent dropping

technique by better adapting to changing arrival patterns. The deriving

principle is to maximize the number of admitted requests subject to the

maximum acceptable network delay constraint. By modeling the

time of the next TCP retransmissions, AFs estimate the amount of available

future capacity and only admits portion of a burst that, with high

probability, will eventually be admitted. This way, bursty

traffic or a sudden jump in network load does not affect the overall delay of

successful connections.

v. Analysis of Impact of Control on Internet Services. The above

contributions focus on analyzing and improving request controls

inside the network. The picture is completed by rigorously analyzing the

effects of controls on the corresponding Internet services. Here the

multi-threaded round robin (MTRR) server model is introduced to analyze

the internal dynamics of a typical Internet service. This model is a

generalization of existing time-sharing process models and is more

representative of a wide range of current service implementations. For this

model, powerful, yet computationally-efficient, mathematical relationships

are developed that capture three important elements: (1) the effects of

concurrency on the performance (in terms of throughput and response time) of a

service, (2) the interactions between threads/processes of

different services, and (3) the relationship between requests arrival

and service performance.

This analytical model is general enough to describe the interactions

between the applied controls and multiple services sharing the same

machine. In particular, it is used to address important issues

in the design of effective QoS mechanisms: (1) predict the true impact

of the applied control---expressed by the maximum allowed request arrival rate

---on the client-perceived latency, (2) estimate the

corresponding performance of all running services, (3) describe the

stability criterion of the underlying server, (4) find the rate limits that

guarantee certain response times to different client groups (e.g.,

paid customers are given preferential treatment over the non-paying ones).

vi. Integrated Adaptation Mechanism.

Combining the results from the above contributions, a general architecture

is designed for integrating signaling information with dynamic control.

This mechanism, which is called Adaptive Packet

Filters (APFs), requires minimal OS modifications to facilitate its

deployment and maximize its portability. It uses a simple and robust

technique that can provide immediate reaction to varying load

conditions. An APF implements its components as plug-in modules. This

allows it to easily integrate new control filters (i.e., persistent dropping

and abacus filters). The current implementation targets Layer-4

network devices and shows full overload protection as well as service

differentiation.